ELT Data FAQs


How ELT Data works

ELT Data is hosted on Amazon Web Services (AWS) servers in the US.

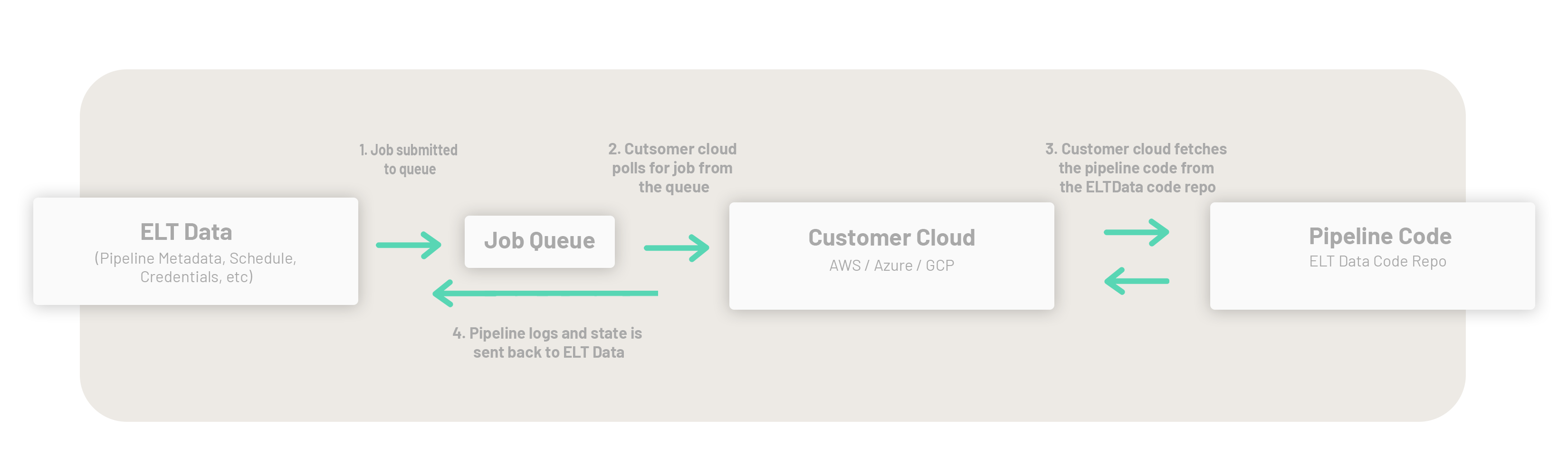

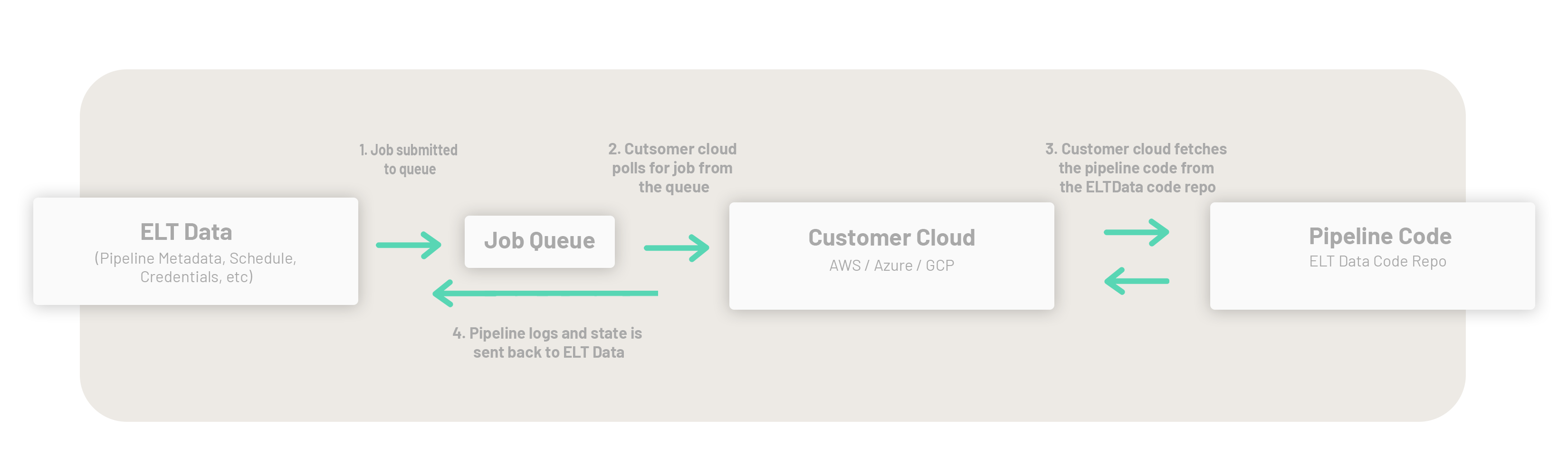

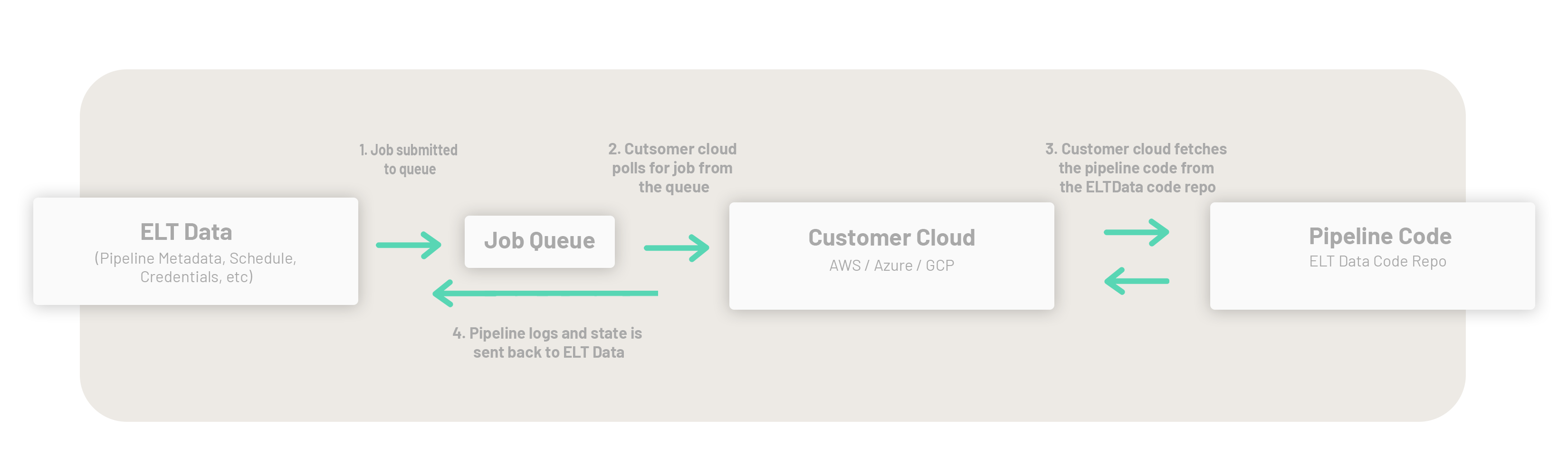

ELT Data manages your data pipelines from its cloud (AWS US East) while your pipelines are executed in your cloud. This way, your data remains in your cloud and is not exposed to ELT Data or any other external application.

ELT Data fetches data from your application by connecting to it using APIs or by reading CSV files exported to an email or SFTP folder.

No, you don't need to buy any IT infrastructure upfront. To run your data pipelines on ELT Data, you need a cloud account with AWS, Azure or GCP. This account spins up servers on demand to run data pipelines and shuts them down once the pipelines have run. It also provisions other resources, such as storage and security, as defined by you. You can license and pay for these via monthly or annual plans.

No. We have tested and deployed data pipelines on tables with over 100 GB of data and 30 million rows. ELT Data is designed to scale infrastructure as required.

You can set up and run as many data pipelines as you want. The number of data pipelines is only constrained by the parameters defined by your cloud provider.

ELT Data charges a fee per application connected. This fee has two components: an upfront charge for onboarding and a monthly charge for maintenance and support. We don't charge based on the size of data ingested or the number of pipelines you run. These are part of the infrastructure costs that you pay directly to your cloud provider.

By default, ELT Data creates a lakehouse in your cloud and follows the Medallion architecture for data management. Files are written in the Delta format and pushed to the data warehouse of your choice. You can consume data directly from the lakehouse or from the warehouse. In addition to Delta, ELT Data can provide data in Iceberg, Parquet or CSV formats in your lakehouse.

With ELT Data, your data never leaves your cloud. In addition, ELT Data can generate data pipelines from an API specification, which directly addresses the long tail of cloud-based business applications that are not served by other connectors.

The transformations in ELT Data are limited to deduping and flattening your data and encrypting selected columns. Business transformations, analytics and reporting are defined by your business needs and are not in the scope of ELT Data.
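As a rough illustration of what deduping and flattening mean in practice, here is a minimal Python sketch. It is not ELT Data's implementation: the function names `flatten` and `dedupe`, the underscore separator, the "last record wins" rule and the sample records are all assumptions made for this example.

```python
from typing import Any


def flatten(record: dict[str, Any], parent: str = "", sep: str = "_") -> dict[str, Any]:
    """Flatten nested dicts into one level, joining nested keys with `sep`."""
    flat: dict[str, Any] = {}
    for key, value in record.items():
        name = f"{parent}{sep}{key}" if parent else key
        if isinstance(value, dict):
            # Recurse into nested objects and merge their flattened keys.
            flat.update(flatten(value, name, sep))
        else:
            flat[name] = value
    return flat


def dedupe(rows: list[dict[str, Any]], key: str) -> list[dict[str, Any]]:
    """Keep one row per primary-key value; a later row replaces an earlier one."""
    latest: dict[Any, dict[str, Any]] = {}
    for row in rows:
        latest[row[key]] = row
    return list(latest.values())


# Hypothetical API response containing a repeated record for id 1.
raw = [
    {"id": 1, "customer": {"name": "Ada", "address": {"city": "Pune"}}},
    {"id": 2, "customer": {"name": "Bo", "address": {"city": "Oslo"}}},
    {"id": 1, "customer": {"name": "Ada", "address": {"city": "Delhi"}}},
]

clean = dedupe([flatten(r) for r in raw], key="id")
for row in clean:
    print(row)
```

After this step each row has flat column names such as `customer_address_city`, and only one row remains per `id`.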

Data security and privacy

Yes, you need to grant limited permissions to ELT Data to spin up servers in your cloud. Please contact us or refer to the documentation on our website for more information.

Yes, all your credentials are secure with ELT Data. We have implemented security safeguards in ELT Data including data encryption, virtual network, Multi-Factor Authentication, detailed alerting and logging. For more details, see the ELT Data Information Security Guide on our website.

No, your credentials are encrypted and stored in the application database. They are not human readable when stored.

At all times, your data resides and moves within your environment: source applications, SharePoint, email and SFTP folders, and destination storage. This storage and movement is governed by your information security policies. Access and authentication are governed by your policies too. With ELT Data, your data is as secure as you set it up to be.

Yes, ELT Data can encrypt the columns you select or can drop them from the final dataset.

No, ELT Data cannot view your business data. While ELT Data orchestrates data pipelines from its cloud, the data movement from your source application to storage destination is entirely within your cloud. ELT Data can only create and access pipeline setup and execution metadata.

Advanced features

ELT Data handles schema evolution automatically and tracks all schema-related changes. In addition, changes to your data and schema can be rolled back to a defined restore point if required.

ELT Data runs the following three checks on all ingested data for every pipeline execution:

- Data freshness test to check when the data was last refreshed

- Primary key not null test

- Primary key uniqueness test to ensure that there are no duplicates in the primary key

In addition, ELT Data has automated retries, logging and alerts built in to make the data pipelines more robust.
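The three checks listed above can be sketched in a few lines of Python. This is only an illustration of the idea, not ELT Data's code: the function names, the `max_age` threshold and the sample rows are assumptions introduced for this example.

```python
from datetime import datetime, timedelta, timezone


def check_freshness(last_refreshed, max_age, now=None):
    """Pass if the data was refreshed within `max_age` of `now`."""
    now = now or datetime.now(timezone.utc)
    return now - last_refreshed <= max_age


def check_pk_not_null(rows, pk):
    """Pass if every row has a non-null primary key."""
    return all(row.get(pk) is not None for row in rows)


def check_pk_unique(rows, pk):
    """Pass if no primary-key value appears more than once."""
    keys = [row.get(pk) for row in rows]
    return len(keys) == len(set(keys))


def run_checks(rows, pk, last_refreshed, max_age, now=None):
    """Run all three checks and report each result by name."""
    return {
        "freshness": check_freshness(last_refreshed, max_age, now),
        "pk_not_null": check_pk_not_null(rows, pk),
        "pk_unique": check_pk_unique(rows, pk),
    }


# Hypothetical batch: one null key and one duplicated key.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
rows = [{"id": 1}, {"id": 2}, {"id": 2}, {"id": None}]
results = run_checks(
    rows,
    pk="id",
    last_refreshed=datetime(2024, 1, 2, 6, 0, tzinfo=timezone.utc),
    max_age=timedelta(hours=24),
    now=now,
)
print(results)
```

In this sample, the freshness check passes because the last refresh was six hours before `now`, while both primary key checks fail.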

By default, all data pipelines in ELT Data have a concurrency of 1: at any given time, only one instance of a pipeline runs and any further instances are queued. In addition, the final data is written in the Delta format, which supports ACID transactions.
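To make the "concurrency of 1" behaviour concrete, here is a minimal Python sketch of a pipeline whose runs are queued and executed one at a time by a single worker thread. The class name `SingleRunPipeline` and its methods are hypothetical and do not reflect how ELT Data schedules pipelines internally.

```python
import queue
import threading


class SingleRunPipeline:
    """Runs submitted pipeline instances strictly one at a time, in FIFO order."""

    def __init__(self, run_fn):
        self._run_fn = run_fn
        self._pending = queue.Queue()  # instances waiting for their turn
        self.errors = []               # (run_id, exception) for failed runs
        # A single worker thread is what enforces the concurrency of 1.
        self._worker = threading.Thread(target=self._drain, daemon=True)
        self._worker.start()

    def submit(self, run_id):
        """Queue a new instance; it starts only after earlier ones finish."""
        self._pending.put(run_id)

    def wait(self):
        """Block until every queued instance has been processed."""
        self._pending.join()

    def _drain(self):
        while True:
            run_id = self._pending.get()
            try:
                self._run_fn(run_id)
            except Exception as exc:  # keep the worker alive after a failure
                self.errors.append((run_id, exc))
            finally:
                self._pending.task_done()


completed = []
pipeline = SingleRunPipeline(completed.append)
for run_id in ("run-1", "run-2", "run-3"):
    pipeline.submit(run_id)
pipeline.wait()
print(completed)
```

Because only one worker drains the queue, the second and third runs wait until the first one has finished, and runs complete in the order they were submitted.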

ELT Data supports the following API authentication mechanisms:

1. API Key

2. Basic Authentication

3. OAuth2

   - Client Credentials

   - Authorization Grant flow

In addition, if your app requires custom authentication, ELT Data can be extended to support custom auth flows.
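For readers less familiar with the mechanisms listed above, the following Python sketch shows how each one typically appears in an HTTP request. It uses only the standard library and is not ELT Data's connector code. The helper names and the `X-API-Key` header name are assumptions: the actual header name varies by application, and some OAuth2 servers expect the client credentials in a Basic `Authorization` header rather than in the request body.

```python
import base64
from urllib.parse import urlencode


def api_key_headers(key, header_name="X-API-Key"):
    """API key: sent in a request header (the header name depends on the app)."""
    return {header_name: key}


def basic_auth_headers(username, password):
    """Basic authentication: base64 of 'username:password' in Authorization."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def client_credentials_body(client_id, client_secret, scope=None):
    """OAuth2 client credentials: form body posted to the app's token endpoint."""
    fields = {
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
    }
    if scope:
        fields["scope"] = scope
    return urlencode(fields)


def bearer_headers(access_token):
    """Once a token is issued, API calls carry it as a bearer token."""
    return {"Authorization": f"Bearer {access_token}"}


# Placeholder credentials, for illustration only.
print(api_key_headers("my-key"))
print(basic_auth_headers("user", "pass"))
print(client_credentials_body("abc", "s3cret"))
print(bearer_headers("token-123"))
```

The Authorization Grant flow ends the same way, with a bearer token, but obtains it through an interactive user consent step that is not shown here.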
